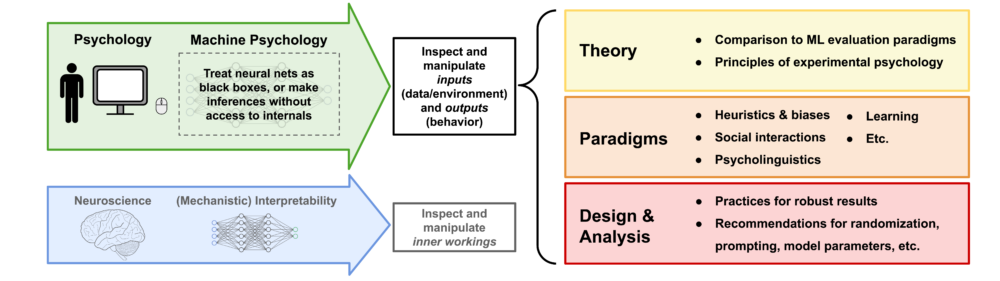

In 2023, I published a paper on machine psychology, which treats LLMs as participants in psychological experiments in order to study their behavior. As the idea gained momentum, the project was expanded and a team of authors was assembled to rewrite it as a comprehensive perspective paper. The result is a collaboration between researchers from Google DeepMind, the Helmholtz Institute for Human-Centered AI, and TU Munich. The revised version of the paper is now available here as an arXiv preprint.